Facebook has announced that it will introduce new ways to notify users when they interact with content that has been rated by a fact-checker, and the social media giant will also take stronger action against people who repeatedly share misinformation on the platform.

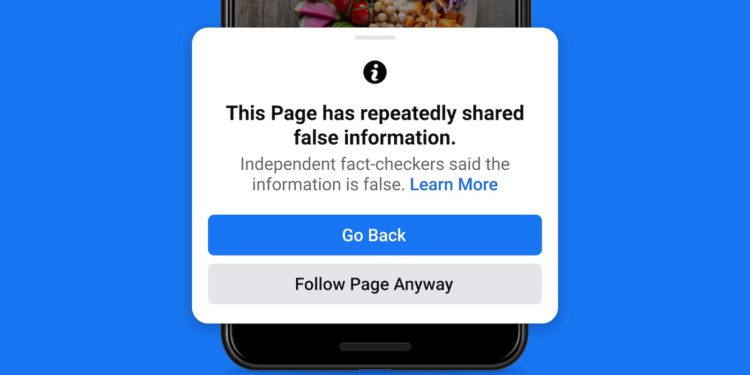

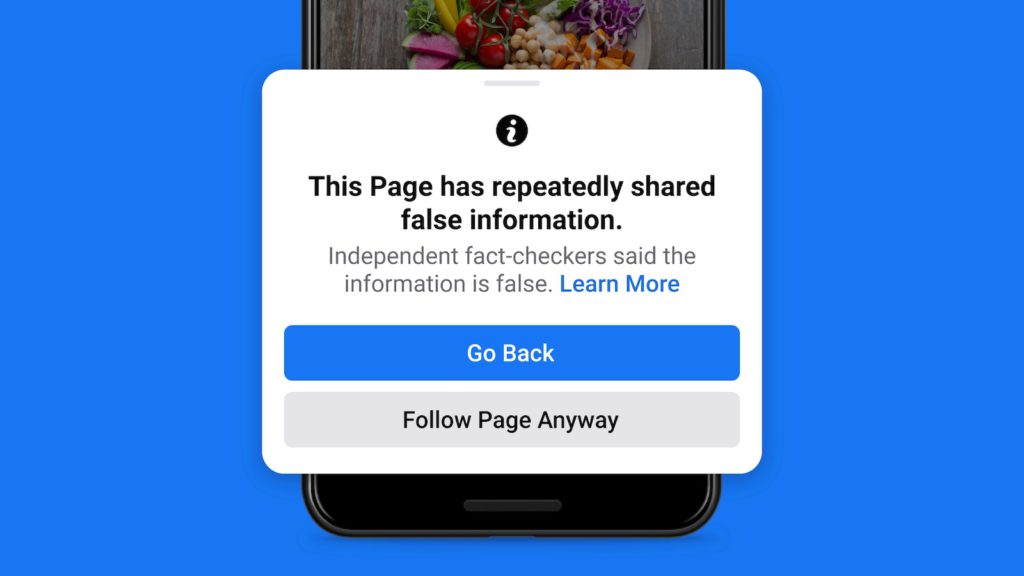

Before a user likes a Page that has repeatedly shared misinformation, Facebook will display a pop-up to warn the user and indicate what fact-checkers have said about some posts shared by the Page that contain false information.

"Whether it's false or misleading content about COVID-19 and vaccines, climate change, elections, or other issues, we're working to reduce the amount of misinformation people see on our apps," Facebook said in its announcement.

Against fake news: Facebook wants to crack down harder

Facebook says that it will also expand penalties for individual accounts. Starting today, the platform will reduce the distribution of all posts in the News Feed from a person's Facebook account when they repeatedly share content that has been rated by one of its fact-checking partners.

The company also announced a redesign of the notifications people receive when they share fact-checked content. The notification will include the fact-checker's article that refutes the claim, as well as a prompt to share that article with one's followers. It will also include a notice that people who repeatedly share false information may have their posts pushed down in the News Feed, making it less likely that other users will see them.

Recently, the platform also started testing a "Read before you share" pop-up. Taking an approach similar to Twitter's, Facebook has started suggesting that users read an article before sharing it.

(Image: Facebook)