Apple has now officially announced plans to integrate a number of features aimed at protecting children online, including a system that can detect child abuse material in iCloud while maintaining user privacy.

The Cupertino tech giant announced new child safety features in three areas on Thursday evening to help protect children and limit the spread of “Child Sexual Abuse Material” (CSAM). The official announcement came just hours after rumors surfaced that Apple wants to introduce some kind of system to contain CSAM on its platforms.

At Apple, our purpose is to create technology that empowers people and enriches their lives – while helping them stay safe.

New system uses cryptographic techniques

For example, Apple will implement new tools in Messages that will allow parents to be more informed about how their children are communicating online. The company is also deploying a new system that uses cryptographic techniques to detect collections of CSAM stored in iCloud Photos and share information with law enforcement. Apple is also working on new security tools in Siri and Search. John Clark, CEO and President of the National Center for Missing & Exploited Children, said:

With so many people using Apple products, these new safeguards have life-saving potential for children who are being lured online and whose horrific images of child sexual abuse are being circulated. At the National Center for Missing & Exploited Children, we know that the only way to combat this crime is to stand firm in our commitment to protecting children. We can only do this because technology partners, like Apple, are stepping up and making their commitment known. The reality is that privacy and child protection can coexist. We applaud Apple and look forward to working together to make this world a safer place for children.

All three features have also been optimized for privacy, ensuring that Apple can share information about criminal activity with the right authorities without compromising the private information of law-abiding users. Here's an overview.

CSAM detection in iCloud Photos

The main new child safety feature Apple plans to implement focuses on detecting CSAM within iCloud Photos. If Apple discovers collections of CSAM stored in iCloud, the company will flag that account and share information with the NCMEC, which acts as a reporting center for child abuse material and works with law enforcement agencies across the U.S. But Apple isn't actually scanning every single image here. Instead, matching happens on the device: photos are checked against a database of known CSAM hashes provided by the NCMEC and other child protection organizations.
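To make the idea of checking against a database of known hashes more concrete, here is a minimal sketch in Python. It is not Apple's implementation: the article does not name the hash function or the matching protocol, so SHA-256 over the raw bytes is used purely as a stand-in, and the names `image_hash`, `build_known_hashes`, and `matches_known` are hypothetical.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    # Stand-in hash (assumption): SHA-256 of the raw image bytes.
    # The article does not say which hash function Apple uses.
    return hashlib.sha256(image_bytes).hexdigest()


def build_known_hashes(known_images: list[bytes]) -> set[str]:
    # The database holds only hashes, never the images themselves.
    return {image_hash(data) for data in known_images}


def matches_known(image_bytes: bytes, known_hashes: set[str]) -> bool:
    # A photo counts as a match only if its hash is already in the database.
    return image_hash(image_bytes) in known_hashes


# Placeholder byte strings stand in for real image files.
known = build_known_hashes([b"known-sample-1", b"known-sample-2"])
print(matches_known(b"known-sample-1", known))
print(matches_known(b"holiday-photo", known))
```

Note that a cryptographic hash like SHA-256 matches only byte-identical files, so this sketch illustrates the lookup principle rather than a system that would recognize resized or re-encoded copies.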

iCloud Photos: After automatic marking, a manual check is carried out

This database is converted into an unreadable set of hashes that are stored securely on the user's device. The actual method for detecting CSAM in iCloud Photos is complicated and uses cryptographic techniques at every step to ensure accuracy while maintaining the average user's privacy. Apple says a flagged account will be deactivated after a manual review process to ensure it is a true positive case. After an account is deactivated, the Cupertino company will notify the NCMEC. Users have the option to appeal the suspension if they feel they have been falsely accused. The company stresses that the feature only detects CSAM stored in iCloud Photos.

Apple: The tool only scans for CSAM in iCloud Photos

It won't apply to photos stored solely on the device. Additionally, Apple claims the chance of the system incorrectly flagging a given account is less than one in a trillion per year. Also, rather than scanning in the cloud, the feature reports only users who have a collection of known CSAM stored in iCloud. So a single image isn't enough to trigger the feature, which helps reduce the rate of false positives. Again, Apple says it will only be notified of images that match known CSAM. It doesn't scan every image stored in iCloud and won't receive or view images that don't match known CSAM. The CSAM detection system will initially only apply to iCloud accounts in the US, but the company says it will likely roll out the system on a wider scale in the future.
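The threshold rule described above (a single match is not enough, only a collection triggers a report) can be sketched roughly as follows. This is an illustration under stated assumptions, not Apple's method: the article gives no threshold value, so `threshold=3` is a made-up number, the hashes are plain strings, and `should_flag_account` is a hypothetical name.

```python
def should_flag_account(photo_hashes: list[str],
                        known_hashes: set[str],
                        threshold: int) -> bool:
    # Count distinct photos whose hash appears in the known database.
    distinct_matches = set(photo_hashes) & known_hashes
    # The account is flagged only once the collection reaches the threshold.
    return len(distinct_matches) >= threshold


known_hashes = {"h1", "h2", "h3", "h4"}

# One matching photo among ordinary ones: below the (hypothetical) threshold.
print(should_flag_account(["h1", "x1", "x2"], known_hashes, threshold=3))

# Three distinct matching photos: the threshold is reached.
print(should_flag_account(["h1", "h2", "h3", "x1"], known_hashes, threshold=3))
```

Requiring several independent matches before anything is reported is what lets a rare accidental match on one harmless photo pass without consequence.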

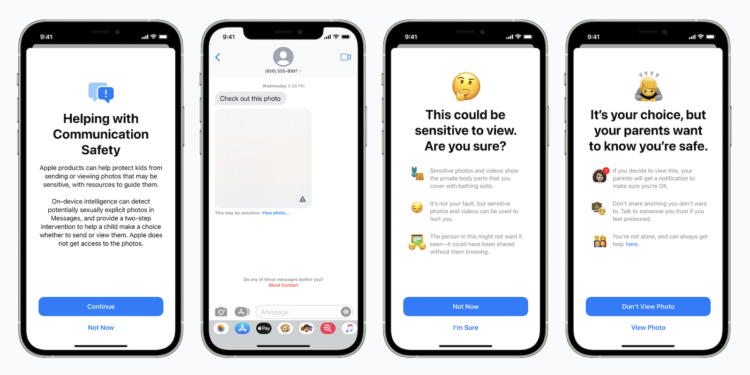

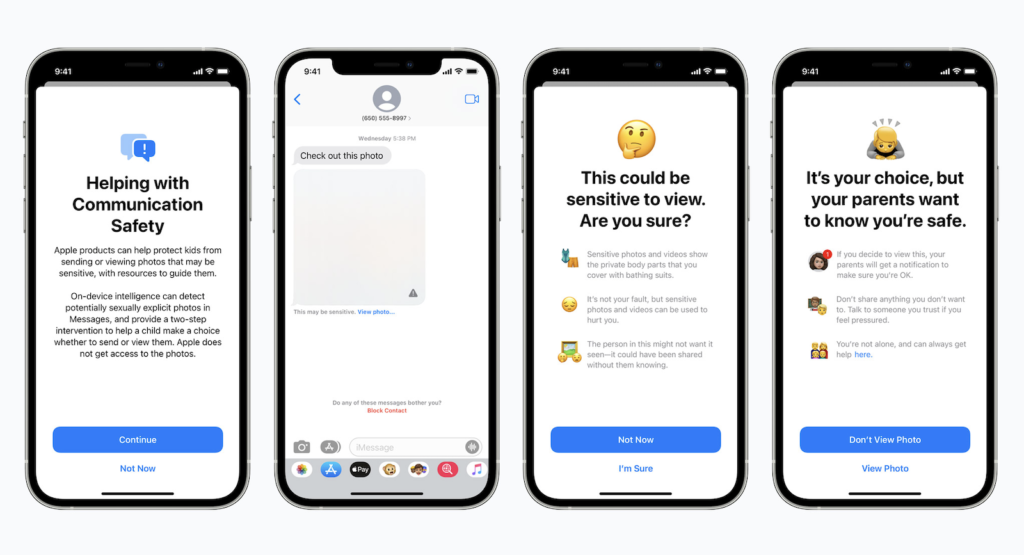

iMessage: Secure communication in iMessage

A second new feature focuses on increasing the safety of children communicating online through Apple's iMessage. For example, the iMessage app will now display warnings for children and parents when they receive or send sexually explicit images. When a child receives a sensitive image, it will automatically be blurred and the child will be presented with helpful resources. Apple has also built in a mechanism to let children know that a message will be sent to their parents if they do view the image. The system uses on-device machine learning to analyze images and determine whether they are sexually explicit. It is designed so that Apple does not receive a copy of the image.
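The flow described for incoming images can be summarized as a small decision function. This is only a sketch of the behavior as reported here: the on-device classifier is reduced to a boolean input, and `ImageDecision` and `handle_incoming_image` are hypothetical names, not part of any Apple API.

```python
from dataclasses import dataclass


@dataclass
class ImageDecision:
    blurred: bool         # image is shown blurred at first
    warn_child: bool      # child sees a warning and helpful resources
    notify_parents: bool  # parents receive a message


def handle_incoming_image(is_explicit: bool, child_views_image: bool) -> ImageDecision:
    # is_explicit stands in for the result of the on-device classifier;
    # in this sketch nothing leaves the device to produce it.
    if not is_explicit:
        return ImageDecision(blurred=False, warn_child=False, notify_parents=False)
    # Explicit images are blurred and the child is warned; parents are
    # notified only if the child goes ahead and views the image.
    return ImageDecision(blurred=True, warn_child=True, notify_parents=child_views_image)


print(handle_incoming_image(is_explicit=True, child_views_image=False))
print(handle_incoming_image(is_explicit=True, child_views_image=True))
```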

Siri & Search will also be adjusted

In addition to the iMessage safety features, Apple is also expanding the tools and resources it offers in Siri & Search when it comes to keeping children safe online. For example, iPhone and iPad users will be able to ask Siri how to report CSAM or child exploitation, and Siri will then provide the appropriate resources and instructions.

Apple: Privacy is protected

Apple has long touted its commitment to protecting user privacy. The company has even clashed with law enforcement over users' privacy rights. For this reason, the introduction of a system designed to share information with law enforcement has worried some security experts. However, Cupertino claims that surveillance and abuse of the system were a "primary concern" during development. According to Apple, every feature has been designed to protect privacy while combating CSAM and child exploitation online.

CSAM detection system can only detect corresponding material

The CSAM detection system was designed from the start to only detect CSAM - it does not include mechanisms to analyze or detect other types of photos. It also only detects collections of CSAM above a certain threshold. Encryption is also unaffected by the detection system. Despite all this, security experts are still concerned about the potential impact. Matthew Green, a professor of cryptography at Johns Hopkins University, notes that the hashes are based on a database that the user cannot see. In addition, there is the possibility that hashes can be abused - for example, if a harmless image shares a hash with known CSAM. He writes:

The idea that Apple is a privacy company has gotten them a lot of good press. But it's important to remember that this is the same company that doesn't encrypt your iCloud backups because of pressure from the FBI.

The new features will be introduced in iOS 15, iPadOS 15, watchOS 8 and macOS 12 Monterey this fall. As mentioned above, they are initially limited to the US. (Image: Apple)