Apple today participated in a question-and-answer session with reporters regarding its new parental controls. During the questioning, Apple confirmed that the company is open to extending the features to third-party apps in the future.

Apple will implement new tools in Messages that allow parents to be better informed about how their children communicate online. Apple is also deploying a new system that uses cryptographic techniques to detect collections of CSAM stored in iCloud Photos and share information with law enforcement. In addition, Apple is working on new safety tools in Siri and Search. As a reminder:

CSAM detection in iCloud Photos

The main new child safety feature Apple plans to implement focuses on detecting CSAM within iCloud Photos. If Apple discovers collections of CSAM stored in iCloud, the company will flag that account and share information with the National Center for Missing and Exploited Children (NCMEC), which acts as a reporting center for child abuse material and works with law enforcement agencies across the U.S. Apple is not actually scanning the content of every single image, however. Instead, it uses on-device matching to check images against a database of known CSAM hashes provided by NCMEC and other child protection organizations.
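To make the idea of checking against known hashes more concrete, here is a minimal sketch of threshold-based matching. It is not Apple's implementation: the SHA-256 fingerprint is a simple stand-in for whatever hashing Apple uses, and the function names and the threshold of 3 are hypothetical values chosen purely for illustration. It only shows the general principle that an account is flagged once a collection of matches is found, not on a single hit.

```python
import hashlib

# Hypothetical threshold: flag only once a collection of matches exists.
MATCH_THRESHOLD = 3


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint (assumption): SHA-256 of the raw image bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images, known_hashes) -> int:
    """Count how many images have a fingerprint in the known-hash set."""
    return sum(1 for image in images if fingerprint(image) in known_hashes)


def should_flag(images, known_hashes, threshold=MATCH_THRESHOLD) -> bool:
    """Return True only when the number of matches reaches the threshold."""
    return count_matches(images, known_hashes) >= threshold
```

Note that an exact hash like SHA-256 only matches byte-identical files, so this sketch says nothing about how visually similar images or privacy-preserving matching would be handled.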

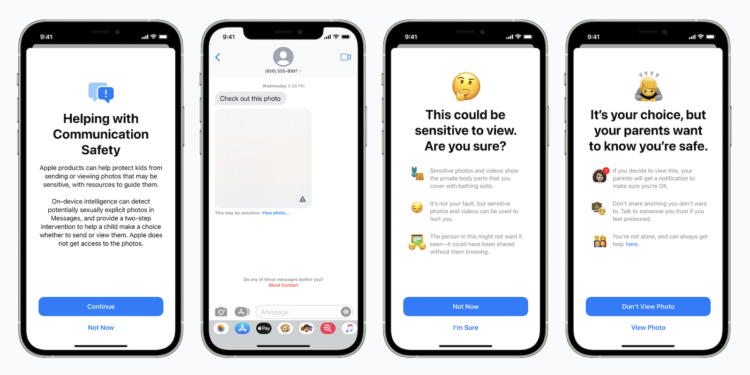

Communication safety in iMessage

A second new feature focuses on increasing the safety of children communicating online through Apple's iMessage. For example, the iMessage app will now display warnings for children and parents when they receive or send sexually explicit images. When a child receives a sensitive image, it will automatically be blurred and the child will be presented with helpful resources. Apple has also built in a mechanism to let children know that a message will be sent to their parents if they do view the image. The system uses on-device machine learning to analyze images and determine whether they are sexually explicit. It is designed so that Apple does not receive a copy of the image.

Siri & Search will also be adjusted

In addition to the iMessage safety features, Apple is also expanding the tools and resources it offers in Siri & Search when it comes to keeping children safe online. For example, iPhone and iPad users will be able to ask Siri how to report CSAM or child exploitation, and Siri will then provide the appropriate resources and instructions.

Expansion to third-party apps

Apple has now told reporters that while it has nothing to announce today, extending parental controls to third-party providers would be a desirable goal to provide even more comprehensive protection for users. Apple did not give any specific examples. One possibility would be that the communication safety feature could be made available for apps like Snapchat, Instagram or WhatsApp, so that sexually explicit photos a child receives are blurred. Another is that Apple's system for detecting known CSAM could be extended to third-party apps that upload photos somewhere other than iCloud Photos. The Cupertino-based company did not give a timeframe for when parental controls could be extended to third-party providers, as it has yet to complete testing and deployment of the features. Apple explained that it would have to ensure that any expansion does not undermine the privacy properties or effectiveness of the features. (Image: Apple)