Apple has now published a document that provides a more detailed overview of the child safety features it first announced two weeks ago.

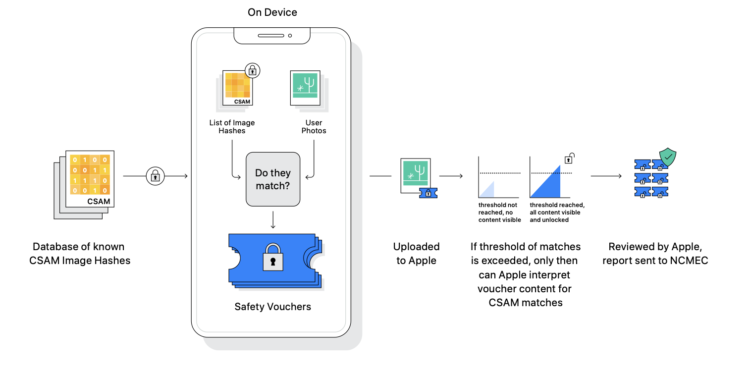

Apple's plan to detect known child sexual abuse material (CSAM) images stored in iCloud Photos has proven particularly controversial. Some security researchers, the nonprofit Electronic Frontier Foundation, and others have raised concerns that governments could abuse the system as a form of mass surveillance. The new document aims to address these concerns and reiterates some details previously disclosed in an interview with Apple's software engineering chief Craig Federighi. For example, an iCloud account must contain at least 30 known CSAM images before a manual review is triggered.

iCloud Photos: Apple explains how the database works

Apple also said that the on-device database of known CSAM images only includes entries independently submitted by two or more child welfare organizations operating in separate, sovereign jurisdictions and not under the control of the same government.

According to the document, the system is designed so that a user does not need to trust Apple or any other single organization, or even a number of potentially collaborating organizations from the same jurisdiction (i.e. under the control of the same government), to be confident that the system will work as advertised. This is achieved through several interlocking mechanisms: the inherent verifiability of a single software image distributed globally for execution on the device, a requirement that all perceptual image hashes contained in the encrypted CSAM database on the device be independently provided by two or more child protection organizations from different sovereign jurisdictions, and finally a human review process to prevent erroneous reports.
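The two-jurisdiction requirement described above can be illustrated with a short sketch. This is not Apple's implementation: the function name `build_database`, the tuple layout, and the placeholder organization, country, and hash names are all assumptions made for illustration. The sketch only shows the rule that a hash is kept when it was submitted from at least two different jurisdictions.

```python
from collections import defaultdict


def build_database(submissions):
    """Keep only hashes submitted from two or more distinct jurisdictions.

    `submissions` is an iterable of (organization, jurisdiction, image_hash)
    tuples. This layout is a hypothetical one chosen for the example.
    """
    jurisdictions_by_hash = defaultdict(set)
    for _organization, jurisdiction, image_hash in submissions:
        jurisdictions_by_hash[image_hash].add(jurisdiction)
    # A hash qualifies only if at least two different jurisdictions reported it.
    return {
        image_hash
        for image_hash, jurisdictions in jurisdictions_by_hash.items()
        if len(jurisdictions) >= 2
    }


# Placeholder data: names and hashes are invented for illustration.
submissions = [
    ("org_a", "country_1", "hash_x"),
    ("org_b", "country_2", "hash_x"),  # second jurisdiction: hash_x qualifies
    ("org_a", "country_1", "hash_y"),
    ("org_c", "country_1", "hash_y"),  # two organizations, same jurisdiction
    ("org_b", "country_2", "hash_z"),  # only one submitter
]
database = build_database(submissions)
```

In this toy data set, only `hash_x` ends up in the database. A hash submitted by two organizations under the same government, such as `hash_y`, is excluded, which is the property Apple says protects against pressure from a single government.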

Apple added that the company will publish a support document on its website that contains a root hash of the encrypted CSAM hash database included in every version of every Apple operating system that supports this feature.
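How a published root hash lets anyone verify a database can be sketched as follows. Apple has not said how its root hash is computed, so everything here is an assumption: the use of SHA-256, the simple Merkle-tree construction, and the hypothetical helper names `merkle_root` and `matches_published`. The point is only that a single short value can commit to the full contents of a database.

```python
import hashlib
import hmac


def merkle_root(entries):
    """Return a hex root hash over a list of byte strings.

    Assumed construction: SHA-256 leaves, pairwise hashing upward, and the
    last node duplicated when a level has an odd number of nodes.
    """
    if not entries:
        return hashlib.sha256(b"").hexdigest()
    level = [hashlib.sha256(entry).digest() for entry in entries]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0].hex()


def matches_published(entries, published_root_hex):
    """Compare the locally computed root with the published value."""
    return hmac.compare_digest(merkle_root(entries), published_root_hex)


# Placeholder entries standing in for database records.
published = merkle_root([b"entry-1", b"entry-2", b"entry-3"])
same = matches_published([b"entry-1", b"entry-2", b"entry-3"], published)
tampered = matches_published([b"entry-1", b"entry-2", b"entry-X"], published)
```

With this construction, changing any single entry changes the root, so `same` is true while `tampered` is false. A device whose database differed from the globally distributed one would therefore show a root hash that does not match the published value.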

Apple retail employees pointed to FAQ

Additionally, Apple said users will be able to check the root hash of the encrypted database on their device and compare it to the expected root hash in the support document, but it gave no timeframe for this. In a memo leaked to Bloomberg's Mark Gurman, Apple said it will also have the system reviewed by an independent auditor. The memo notes that Apple retail employees may receive questions from customers about the child safety features and refers them to a FAQ that Apple released earlier this week, which employees can use to answer questions and give customers more clarity and transparency. (Image: Apple)