Apple's recent announcement of child protection measures covering the scanning of iCloud Photos and notifications in iMessage has sparked a lot of discussion around the world. Security researchers and other observers are weighing in, and so, of course, are ordinary users like you and me. But there is one thing we should all be aware of: despite Apple's new features, which are limited to the US for now, Apple's privacy principles do not change. Below, we examine the most important questions and try to provide more clarity.

On August 5, 2021, Apple announced a new set of tools designed to help protect children online and reduce the spread of Child Sexual Abuse Material (CSAM). They include new features in iMessage, Siri, and Search, as well as a mechanism that checks iCloud Photos for known CSAM images.

CSAM scan: reactions vary

Reactions to Apple's announcement are mixed, among fans as well as among privacy and online security experts and other observers. Interestingly, many people underestimate how widespread the practice of scanning image collections for CSAM really is. Apple is certainly not the first company to do this; it has simply attracted more attention. Moreover, Apple is by no means giving up on privacy protection. So, let's get started.

Apple's child protection features

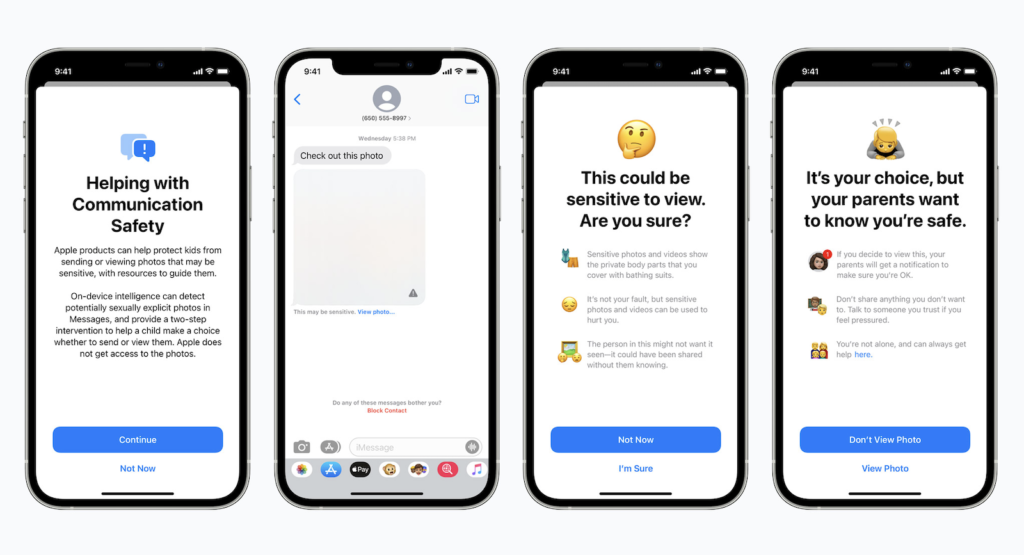

The company's suite of child protection features includes the aforementioned iCloud Photos scanning as well as updated tools and resources in Siri and Search. It also includes a feature designed to flag inappropriate images sent to or from minors via iMessage. As Apple noted in its original announcement, all of the features were designed with privacy in mind. Both the iMessage feature and the iCloud Photos scan, for example, rely on processing that happens on the device itself.

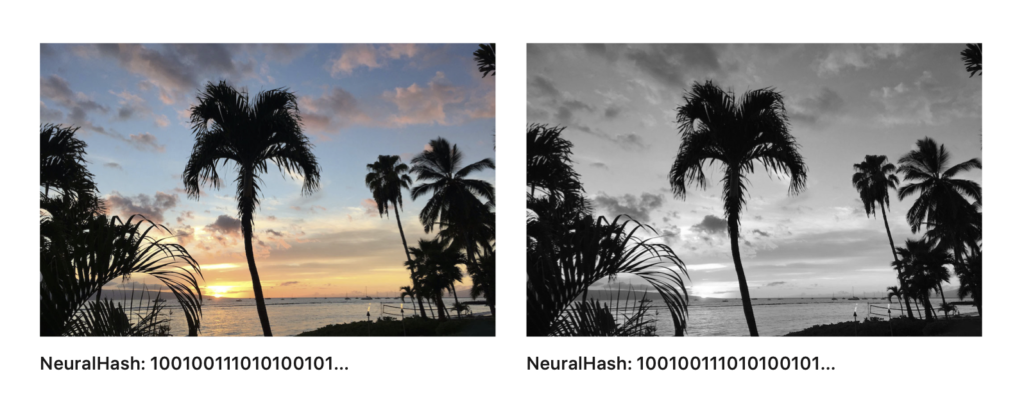

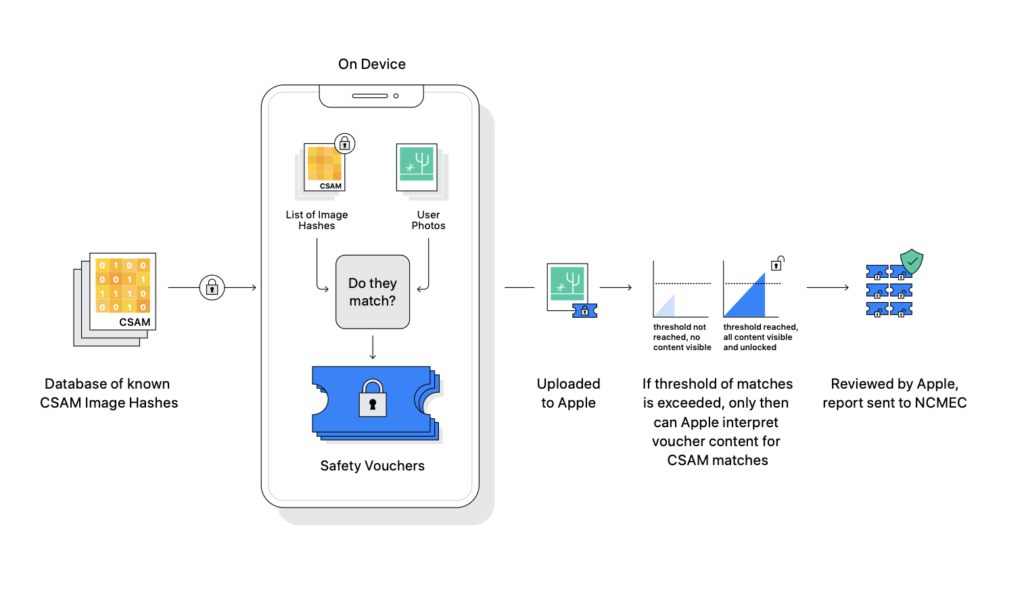

“Scanner” compares mathematical hashes

Additionally, the new iCloud Photos "scanner" doesn't actually analyze the content of the images on the user's iPhone. Instead, before a photo is uploaded to iCloud Photos, the device computes a mathematical hash of it and compares that hash with the hashes of known CSAM images. If an account has stored a collection of known CSAM images in iCloud, it is flagged and subjected to a manual review by Apple. Only if that review confirms the matches is the account disabled and a report sent to the National Center for Missing & Exploited Children (NCMEC). According to Apple, the system is designed so that the chance of incorrectly flagging a given account is less than one in one trillion per year. This is due to a "detection threshold": an account is only flagged once a certain number of matches has been reached. Apple does not want to disclose the exact threshold, which is understandable and a good thing.
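To make the threshold idea concrete, here is a minimal Python sketch of threshold-based hash matching. It is not Apple's implementation: Apple's design additionally blinds the hash database and uses cryptographic techniques (private set intersection and threshold secret sharing) so that neither the device nor Apple learns about individual matches below the threshold. The hash values, `KNOWN_HASHES`, `MATCH_THRESHOLD`, `count_matches`, and `should_flag_for_review` are all hypothetical, chosen only for illustration.

```python
# Hypothetical database of known-CSAM hashes. In Apple's design the list comes
# from child safety organizations such as NCMEC and is stored blinded on the
# device; here it is just a plain set of strings.
KNOWN_HASHES = {"a1f3", "9c0e", "77b2"}

# Hypothetical threshold: an account is only flagged once this many photos match.
MATCH_THRESHOLD = 2


def count_matches(photo_hashes, known_hashes):
    """Count how many of an account's photo hashes appear in the known-hash set."""
    return sum(1 for h in photo_hashes if h in known_hashes)


def should_flag_for_review(photo_hashes, known_hashes=KNOWN_HASHES,
                           threshold=MATCH_THRESHOLD):
    """True only if matches reach the threshold; "flag" means manual human review."""
    return count_matches(photo_hashes, known_hashes) >= threshold


family_photos = ["0b41", "5d9a", "e210"]      # no known hashes
print(should_flag_for_review(family_photos))  # False
print(should_flag_for_review(["a1f3"]))       # False: one match is below the threshold
```

Flagging in this sketch only queues an account for human review; it does not produce a report by itself, which mirrors the order of steps described above.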

New iMessage system is even more privacy-friendly

We should also not forget that the matching only takes place in conjunction with iCloud Photos. If a user disables iCloud Photos in the system settings, the matching is disabled as well. The iMessage system is even more privacy-friendly. It only applies to child accounts in Family Sharing and must be turned on by the parents, so it is opt-in, not opt-out. In addition, it does not generate reports that are sent to external parties; only the parents are notified that an inappropriate image has been received or sent. The bottom line is that the new features in iCloud Photos and iMessage work completely independently of each other and, technically, have nothing to do with each other. Their only common goal is protecting children.

- The iCloud Photos matching hashes images without examining their context and compares the hashes with a database of known CSAM. Only once the threshold is exceeded and a manual review confirms the matches is a report sent to NCMEC, which maintains the hash database.

- The iMessage feature for child accounts uses on-device machine learning, does not compare images with CSAM databases, and does not send reports to Apple, only notifications to the parents' accounts in Family Sharing.
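The independence of the two features can be sketched as two separate checks that read different settings. This is a hypothetical model for illustration only: `AccountSettings`, its field names, and both functions are invented and do not correspond to any Apple API.

```python
from dataclasses import dataclass


@dataclass
class AccountSettings:
    # Hypothetical settings model; these are not real Apple API names.
    icloud_photos_enabled: bool
    is_child_account: bool
    parent_opted_in: bool  # parent turned on the iMessage feature in Family Sharing


def csam_matching_active(s: AccountSettings) -> bool:
    # Hash matching only applies to photos uploaded to iCloud Photos.
    return s.icloud_photos_enabled


def message_safety_active(s: AccountSettings) -> bool:
    # The iMessage feature only applies to child accounts, and only after opt-in.
    return s.is_child_account and s.parent_opted_in


adult = AccountSettings(icloud_photos_enabled=False, is_child_account=False,
                        parent_opted_in=False)
print(csam_matching_active(adult), message_safety_active(adult))  # False False
```

The two functions read disjoint settings, which mirrors the point that neither feature depends on the other.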

So let's answer a few important questions on the topic, starting with the ones most commonly asked on the internet so far.

So will Apple scan all my photos?

Apple doesn't scan your photos in the conventional sense. It checks a numerical value derived from each photo against a database of known illegal content to see whether they match. The system doesn't see the image; it sees the hash (Apple calls its hashing technique NeuralHash). It also only checks images that are uploaded to iCloud Photos. That means the system can only detect images whose hashes are already in the database.
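To illustrate how a photo can be reduced to a number that is then looked up, the sketch below uses a classic "average hash", a much simpler perceptual hash than Apple's NeuralHash, which is based on a neural network. The technique is swapped in for illustration only; the tiny 4x4 images, the `known` set, and the functions `average_hash` and `is_known` are hypothetical. The point it shows: the lookup only answers "is this hash in the set?", and a slightly brighter copy of the same image produces the same hash.

```python
def average_hash(pixels):
    """Reduce a grayscale image (rows of 0-255 values) to an integer.

    Each bit records whether one pixel is brighter than the image's mean,
    so a uniform brightness change leaves the hash unchanged.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    value = 0
    for p in flat:
        value = (value << 1) | (1 if p > mean else 0)
    return value


def is_known(pixels, known_hashes):
    """Return True if the image's hash appears in the set of known hashes."""
    return average_hash(pixels) in known_hashes


# Hypothetical 4x4 images (real systems work on larger, downscaled images).
img = [[10, 200, 10, 200],
       [200, 10, 200, 10],
       [10, 200, 10, 200],
       [200, 10, 200, 10]]
brighter = [[p + 20 for p in row] for row in img]   # same picture, brighter
other = [[200, 200, 10, 10] for _ in range(4)]      # a different picture

known = {average_hash(img)}
print(is_known(brighter, known))  # True
print(is_known(other, known))     # False
```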

Can I deactivate the comparison?

As mentioned above, Apple confirmed to several US media outlets that the system can only detect CSAM in iCloud Photos. If you turn off iCloud Photos, no matching takes place.

iMessage: Can I still send nude photos to my partner?

If you are an adult, Apple will not flag you. As mentioned above, this mechanism only applies to child accounts and must first be activated, i.e. it is opt-in. Apple will therefore not stop you from sending nude photos to your partner.

Can I still take pictures of my children in the bathtub?

Here, too, some people have misunderstood Apple's new features. As I said, CSAM detection cannot recognize the context of individual images. The mechanism cannot tell a rose from a genital organ (I know, a weird comparison!); it recognizes neither. It simply compares the hashes of photos uploaded to iCloud with a database of known CSAM hashes maintained by NCMEC. In other words, unless your photos of your children are in the NCMEC database, they will not be flagged by the iCloud Photos matching mechanism.

Help – I am falsely accused of possessing CSAM

Apple says its system is designed so that the chance of incorrectly flagging a given account is less than one in one trillion (1:1,000,000,000,000) per year. By comparison, the odds of being struck by lightning are about 1:15,000, and even winning the lottery jackpot is more likely. If this unlikely event does occur, Apple initiates a manual review, and at that point at the latest, the error should become apparent. In short: pictures of your children in the bathtub or nude pictures of yourself are no more likely than any other photo to cause such a false positive, because the system only looks for matches with known CSAM.
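Why does a threshold push the false-positive rate so low? A rough sketch: if each photo independently had a small chance `p` of a false hash match, the probability that an account with `n` photos reaches at least `t` false matches is a binomial tail. The values of `n_photos`, `p_false`, and the thresholds below are hypothetical and are not Apple's figures, and real hash collisions need not be independent; the code only shows how steeply the probability falls as the threshold rises. `prob_at_least` is a helper written for this example.

```python
import math


def prob_at_least(t, n, p):
    """P(X >= t) for X ~ Binomial(n, p), with each term computed in log space
    (via lgamma) so that large binomial coefficients do not overflow."""
    log_p, log_q = math.log(p), math.log1p(-p)
    total = 0.0
    for k in range(t, n + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * log_p + (n - k) * log_q)
        total += math.exp(log_term)  # far-tail terms underflow harmlessly to 0.0
    return total


n_photos = 10_000  # hypothetical library size
p_false = 1e-6     # hypothetical per-photo false-match probability

print(f"threshold 1:  {prob_at_least(1, n_photos, p_false):.3e}")
print(f"threshold 10: {prob_at_least(10, n_photos, p_false):.3e}")

# The article's comparison: lightning odds versus Apple's stated per-account rate.
lightning_vs_apple = (1 / 15_000) / 1e-12
print(f"lightning is about {lightning_vs_apple:.1e} times more likely")
```

Requiring several independent matches multiplies small probabilities together, which is why the account-level rate can be far lower than any per-photo rate.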

Which countries will receive these features?

Apple's child protection features will initially be rolled out only in the US. However, Apple has confirmed that it will consider offering them in other countries after a country-by-country legal review, which suggests that a rollout beyond the US is at least on the table.

Which devices are affected?

The new measures will arrive with iOS 15, iPadOS 15, watchOS 8, and macOS 12 Monterey, i.e. on iPhone, iPad, Apple Watch, and Mac. The exception is CSAM detection, which will only be available on iPhone and iPad.

When will these new measures come into force?

Apple says all features will be released “later this year,” meaning in 2021. They could therefore go live with the launch of the new software generation or be activated in a later point update.

Privacy concerns: they are justified

Despite Apple's explanations and extensive disclosures, many users have privacy concerns, and many cryptography and security experts share them. Some experts fear, for example, that this type of mechanism could make it easier for authoritarian governments to crack down on dissent. Although Apple's system is designed only to detect CSAM, they fear that repressive governments could force Apple to adapt it to also detect dissenting or anti-government content. The Electronic Frontier Foundation, a prominent digital rights nonprofit, notes that a similar system originally designed to detect CSAM was repurposed to build a database of "terrorist" content. Apple has since commented on this; here is a quote from one of my previous articles:

Apple's new CSAM detection system will be limited to the United States at launch. To avoid the risk that some governments might try to abuse the system, Apple confirmed that the company will consider a possible global expansion of the system after a legal assessment on a country-by-country basis.

It is therefore not expected that the feature will ever be available worldwide; certain markets are likely to remain excluded.

Apple's CSAM system is not new

Whenever Apple announces something new, it is discussed worldwide. Unlike most companies, the maker with the bitten apple logo receives a great deal of attention, and this latest case was no exception. This leads some to believe that the company's new child protection measures are somehow unique. In reality, they are not. As The Verge reported in 2014, Google has been scanning Gmail users' inboxes for known CSAM since 2008. Some of these scans have even led to the arrest of people sending CSAM. Google also works to flag CSAM and remove it from search results. But that's not all. Microsoft originally developed the system that NCMEC uses: PhotoDNA, which Microsoft donated, is used to compare image hashes and detect CSAM even when an image has been altered. And Facebook?

CSAM scanning is now common practice

Facebook started using it in 2011, and Twitter adopted it in 2013. It is also used by Dropbox and similar services. So is Apple a latecomer? In a way, yes. Apple has already acknowledged that it scans for CSAM: in 2019, the company updated its privacy policy to state that it scans for "potentially illegal content, including child sexual exploitation material." With the new system, Apple has simply refined its approach. So while Apple is uniquely positioned as a privacy-respecting company, this type of CSAM scanning is commonplace among internet companies, and for good reason.

Conclusion

While privacy is a fundamental human right, any technology that curbs the spread of CSAM is also inherently good. Apple has managed to develop a system that works toward the latter goal without significantly compromising the privacy of the average person. (Photo by Unsplash / Carles Rabada)