Apple's announcement yesterday of new CSAM detection techniques caused a lot of discussion and, unfortunately, confusion. Contrary to what some people believe, Apple cannot check images if users have iCloud Photos disabled.

Although the announcement included a detailed explanation that answered some questions, Apple's upcoming feature is still causing confusion. Admittedly, it was a lot to take in, so below I break down the matching process once more, in as much detail as the information Apple has provided allows.

Detection technology arrives with iOS 15 and co.

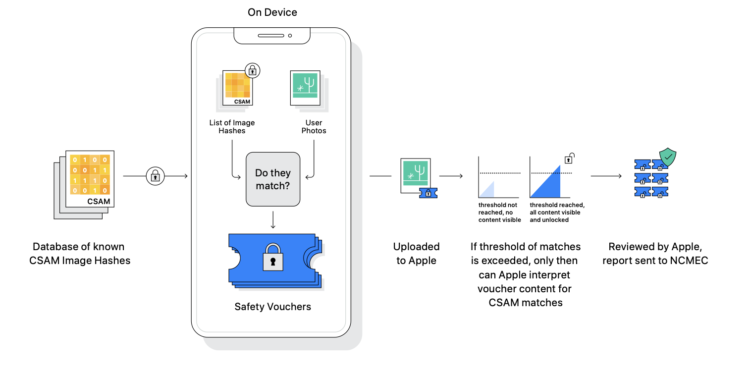

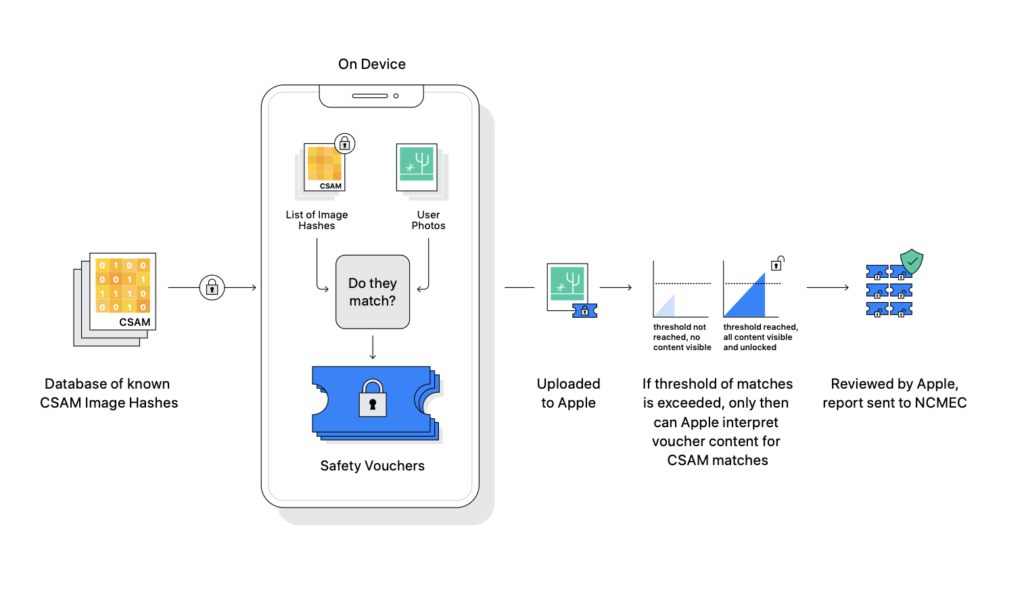

With iOS 15, iPadOS 15, watchOS 8 and macOS 12 Monterey, Apple will load a database of hashes of known CSAM (Child Sexual Abuse Material) images onto devices. Users cannot read this database, and a user's images will be compared against it. However, the comparison only takes place when local images are uploaded to the iCloud Photo Library, i.e. immediately before the upload. According to Apple, this is a very accurate method of detecting CSAM and protecting children. Checking for CSAM images is not an optional feature, so it happens automatically.

Against child abuse: Block checks for CSAM content

But it can be deactivated: Apple confirmed to MacRumors that it cannot detect known CSAM images if the iCloud Photos feature is turned off. The method works by identifying a known CSAM photo on the device and flagging it before it is uploaded to iCloud Photos. Once a certain number of such flagged photos have been uploaded to iCloud Photos, Apple is alerted. Only then is a manual review performed. If CSAM content is indeed found, the user account is deactivated and the National Center for Missing and Exploited Children is notified. This American non-governmental organization then contacts law enforcement authorities. Apple has also confirmed that it cannot detect known CSAM images in iCloud backups if iCloud Photos is disabled on a user's device.
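For readers who think in code, here is a rough sketch of the flow described above. It is only an illustration under loud assumptions, not Apple's implementation: SHA-256 stands in for whatever image hash Apple actually uses, and `REVIEW_THRESHOLD`, `Account` and `upload_photo` are hypothetical names I made up. Apple has only said that "a certain number" of matches is needed, so the value 3 is a placeholder.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical value: the real threshold has not been published.
REVIEW_THRESHOLD = 3


def image_hash(data: bytes) -> str:
    # Stand-in only: SHA-256 is used here to keep the sketch runnable.
    return hashlib.sha256(data).hexdigest()


@dataclass
class Account:
    icloud_photos_enabled: bool
    flagged_uploads: int = 0


def upload_photo(account: Account, data: bytes, known_hashes: set) -> bool:
    """Simulate one upload; return True once manual review would be triggered."""
    if not account.icloud_photos_enabled:
        # No iCloud Photos upload means no comparison takes place at all.
        return False
    # The comparison happens immediately before the upload.
    if image_hash(data) in known_hashes:
        account.flagged_uploads += 1
    # Only after enough flagged uploads would a manual review start.
    return account.flagged_uploads >= REVIEW_THRESHOLD
```

The point of the sketch is the order of the checks: with iCloud Photos turned off, the function returns before any hash is compared, and a single match on its own does not trigger a review.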

Apple's new child protection features: Security researchers express concern

So if you want to prevent the comparison altogether, you have to deactivate iCloud Photos in the system settings. I would also like to remind you that this new detection technology looks for hashes of known child sexual abuse material and does not generally scan a user's photo library. This also means that other content is not detected. In addition, only images are checked, not videos. However, security researchers have already expressed concerns about Apple's CSAM initiative and fear that in the future it could be used to detect other types of content, which could have political and security implications. For now, though, Apple's efforts are limited to finding child predators. If you want to learn more about Apple's new initiative, check out the article below.

Note:

The new scanning process will be rolled out in the fall and will initially be limited to the USA. However, it will be expanded in the future. (Image: Apple)